Table of Contents

- Fixing low performing blog posts systematically

- Writing an inefficient ETL-job in N8N (yes, inefficient)

- Filtering out low performers

- OnPage Checks - Or, rebuilding Yoast SEO the hard way

- Manual OnPage Checks vs. choosing the right backend

- The boring part: fixing my mistakes

- Conclusion: Why old systems are market leaders for a reason and no, AI won't replace content creators

Intro: Fixing low performing blog posts systematically

We have blog posts that rank well on Google and that show up on Perplexity and in other LLM-based tools. However, we also have 10+ posts with <100 views. In this post, I walk through the workflow we use to improve them systematically. It involves:

- Bringing together blog posts, Google Analytics, and Google Search Console data

- OnPage analysis

- SEO analysis

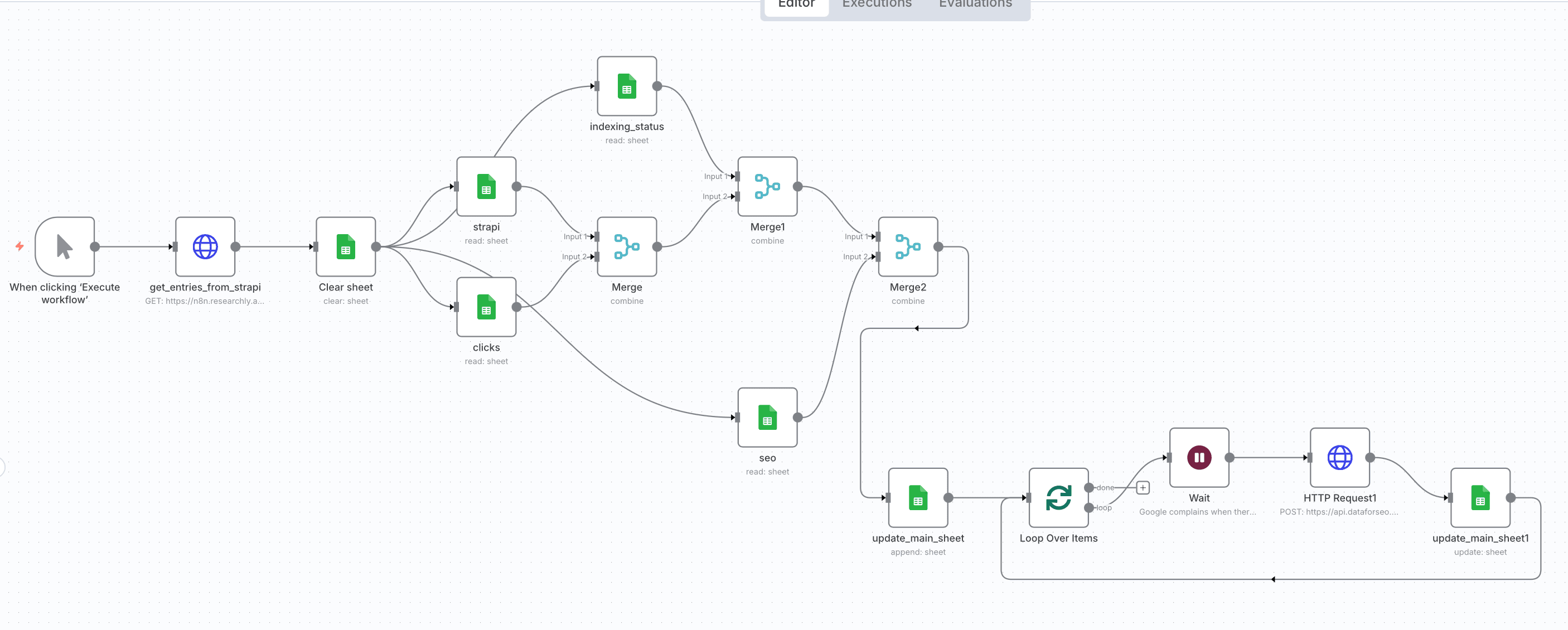

I have created an N8N-workflow which you can download here. Here is a walk-through:

Writing an inefficient ETL-job in N8N (yes, inefficient)

For the better half of my professional life, I have been building enterprise data warehouses. For each project I had a few core principles:

- separate business logic and data logic. By data logic I mean traditional ETL: getting the data from multiple sources, transforming it, and loading it into a centralized data structure. By business logic I mean analyzing this data.

- separate raw data from modified data (in Data Vault also called raw vault and business vault)

- choosing the right database based on the processing needs (OLAP vs. OLTP)

- using delta-loads to avoid time-consuming full-loads

With my current workflow, I ignored most of that :D. In this particular case, I separated the layers too, but kept it simple:

- all the data is stored in Google Sheets; long-term I will store the data in a Postgres DB

- no history, I do a full load each time; if our content grows significantly, I will add delta-loading, but for now a full load is OK.

Having said that, implementing the ETL in its current form is easy in N8N:

- use the HTTP node to get the data from our CMS (Strapi): we use the same endpoint that serves the data on our blog, which is very convenient. One caveat is that some internal values per blog post are not exposed there, so to get these I would need to use a different endpoint.

- Clear the main Google sheet to perform the full-load afterwards

- combine the data from different sheets into one master-sheet

- enrich each blog post with data from DataForSEO to obtain keyword volumes

There are a few caveats with that:

- The merge node only accepts two inputs, so you need to merge over multiple steps

- Google Sheets does not like being queried too often, hence the Wait node between requests to avoid request timeouts
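To make the two caveats concrete, here is a minimal Python sketch of the same idea outside of N8N. It is not the actual workflow: the field names (`slug`, `views`, `clicks`), the helper names, and the batch size and pause are all assumptions chosen for illustration. It chains two-input merges to build one master list and pauses between write batches.

```python
from functools import reduce
import time


def merge_by_key(left, right, key="slug"):
    """Left-join two lists of dicts on `key` (one two-input merge step)."""
    lookup = {row[key]: row for row in right}
    return [{**row, **lookup.get(row[key], {})} for row in left]


def merge_all(sources, key="slug"):
    """Chain pairwise merges, since each step only takes two inputs."""
    return reduce(lambda acc, nxt: merge_by_key(acc, nxt, key), sources)


def write_in_batches(rows, write_batch, batch_size=50, pause_seconds=1.0,
                     sleep=time.sleep):
    """Send rows in batches and pause between batches (the 'wait' step)."""
    for start in range(0, len(rows), batch_size):
        write_batch(rows[start:start + batch_size])
        if start + batch_size < len(rows):
            sleep(pause_seconds)


# Hypothetical sample data standing in for the three sources.
posts = [{"slug": "a", "title": "Post A"}, {"slug": "b", "title": "Post B"}]
analytics = [{"slug": "a", "views": 250}, {"slug": "b", "views": 40}]
search_console = [{"slug": "a", "clicks": 30}]

master = merge_all([posts, analytics, search_console])
```

Posts without a match in a later source simply keep the columns they already have, which is roughly what a left join in a spreadsheet lookup would give you.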

Filtering out low performers

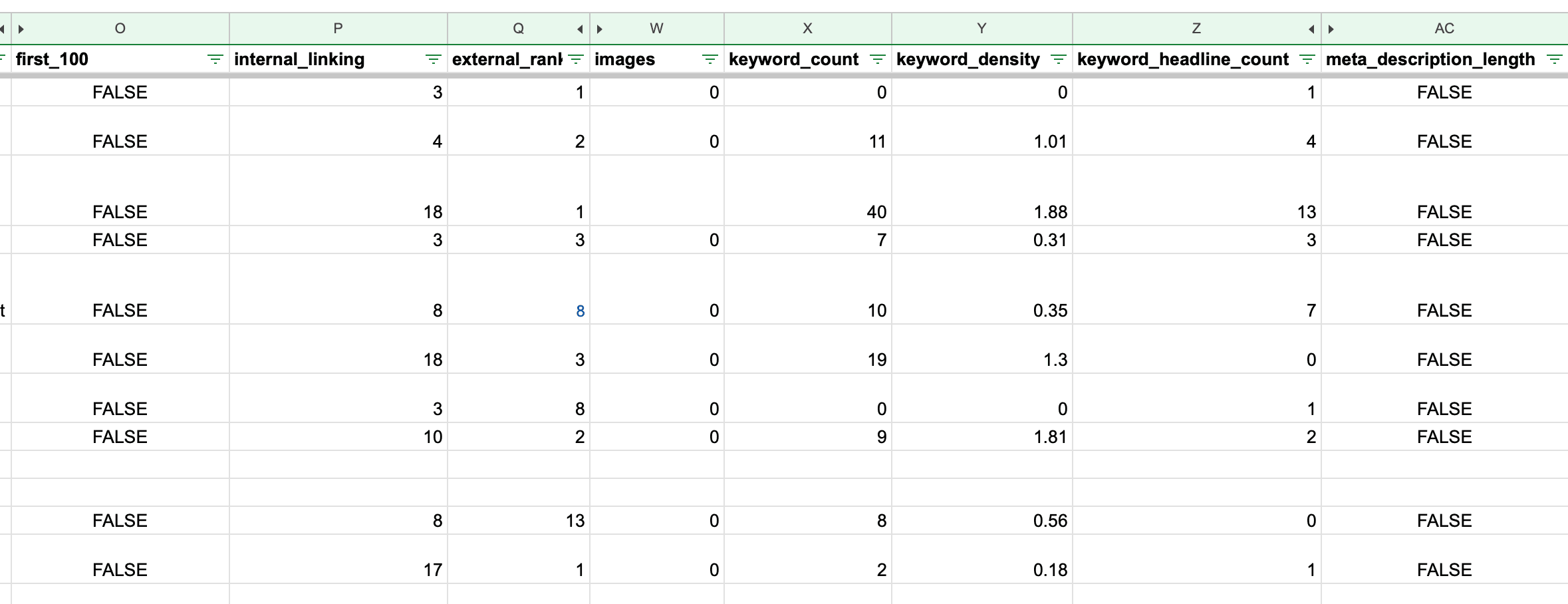

Once I have all the data in one sheet, I can start the actual checks. But first, I single out the low performers so that only those go through the checks. For now, this means selecting articles with < 100 views. 100 views is an arbitrary threshold; in the future, I want to make it dynamic based on keyword volume. We also have some blog posts where I do not expect a lot of views in general because they do not serve SEO purposes. Currently, I filter these out manually, but long-term I would need to classify them in Strapi first.
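As a rough illustration of this step, the following Python sketch keeps only posts below the view threshold and skips posts that are not meant for SEO. The field names and the `excluded_slugs` parameter are assumptions; in the workflow itself this is a filter step on the sheet data plus a manual exclusion.

```python
VIEW_THRESHOLD = 100  # arbitrary cut-off, as described in the text


def select_low_performers(rows, threshold=VIEW_THRESHOLD,
                          excluded_slugs=frozenset()):
    """Return posts below the view threshold, skipping non-SEO posts."""
    return [
        row for row in rows
        if row.get("views", 0) < threshold
        and row["slug"] not in excluded_slugs
    ]


# Hypothetical sample rows from the master sheet.
rows = [
    {"slug": "a", "views": 250},
    {"slug": "b", "views": 40},
    {"slug": "release-notes", "views": 12},  # not written for SEO
]

low_performers = select_low_performers(rows, excluded_slugs={"release-notes"})
```

Making the threshold a parameter keeps the door open for the dynamic, keyword-volume-based variant later on.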

OnPage Checks - Or, rebuilding Yoast SEO the hard way

After filtering out the well-performing posts, the main part starts: performing the actual OnPage checks. A lot of these checks are based on Yoast:

- Do I have enough internal links per post: we use DataForSEO for that

- Do I have enough external links per post: we use DataForSEO for that

- High enough keyword density: we calculate that using a simple regex search. This works OK; it misses a few keywords here and there, but as keyword density is not an exact science, that is acceptable.

- Meta Description long enough: this is hardcoded to 160 characters which seems to be a common practice

- Meta Description SEO-optimized: does the keyword appear in the first 10 characters

- Meta Title long enough: this is hardcoded to 160 characters which seems to be a common practice

- Meta Title SEO-optimized: does the keyword appear in the first 10 characters

As a result, I get a traffic light system like in the screenshot.

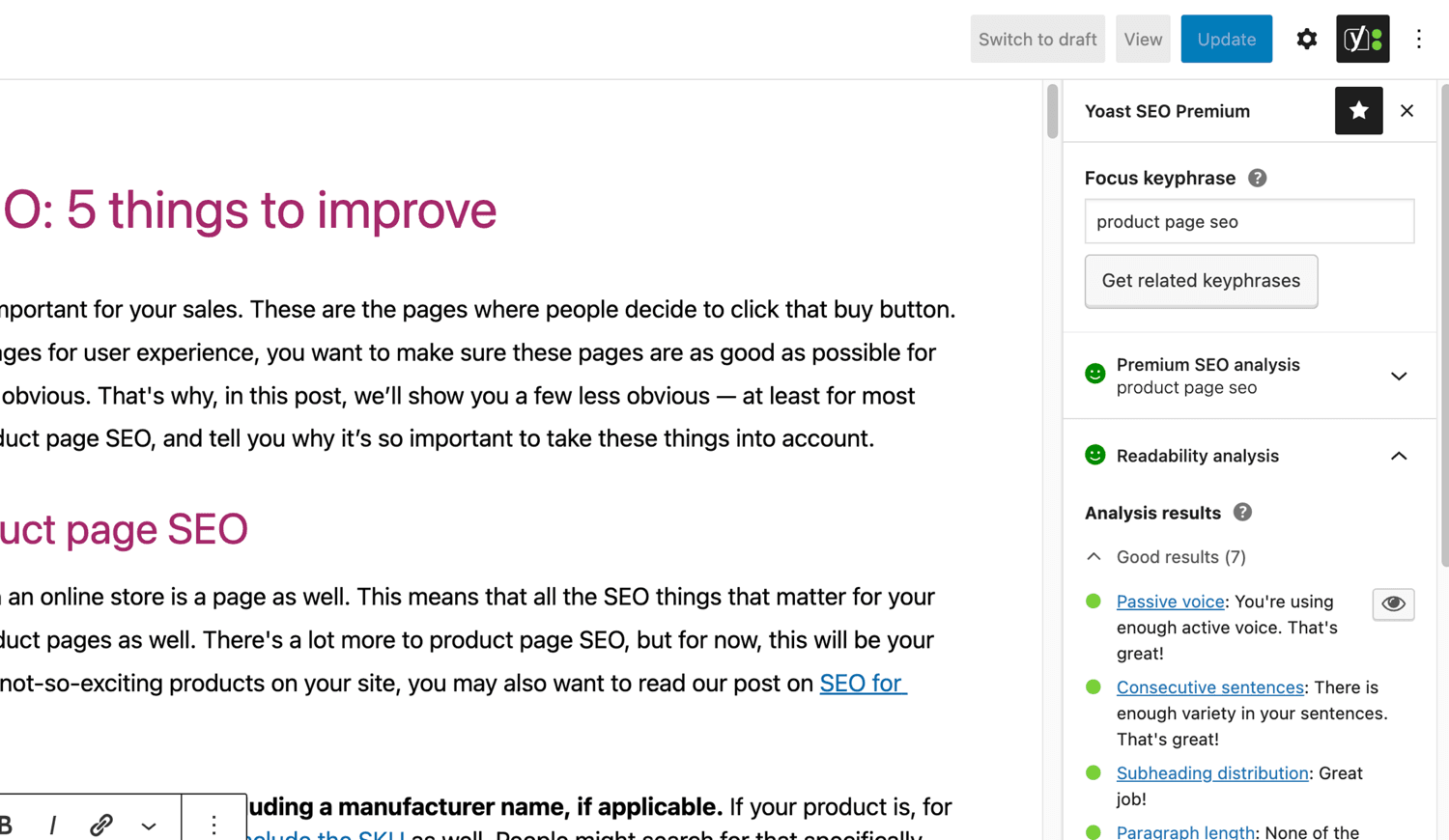
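A few of these checks can be sketched in Python as follows. This is an illustration under stated assumptions, not the workflow's actual logic: the density thresholds (0.5% and 1%) are hypothetical, the 160 characters are treated as an upper bound for the meta description, "in the first 10 characters" is read as "the keyword starts within the first 10 characters", and the field names are made up.

```python
import re


def keyword_density(text, keyword):
    """Share of keyword occurrences relative to the total word count."""
    words = re.findall(r"\w+", text.lower())
    if not words:
        return 0.0
    pattern = r"\b" + re.escape(keyword.lower()) + r"\b"
    return len(re.findall(pattern, text.lower())) / len(words)


def keyword_starts_early(text, keyword, within=10):
    """True if the keyword starts within the first `within` characters."""
    return 0 <= text.lower().find(keyword.lower()) < within


def traffic_light(value, red_below, green_from):
    """Map a numeric score to red / yellow / green."""
    if value >= green_from:
        return "green"
    if value >= red_below:
        return "yellow"
    return "red"


def onpage_report(post, keyword):
    """Run the checks and return one traffic-light colour per check."""
    meta = post["meta_description"]
    density = keyword_density(post["content"], keyword)
    return {
        # Hypothetical thresholds: below 0.5% red, from 1% green.
        "keyword_density": traffic_light(density, 0.005, 0.01),
        # Assumption: 160 characters is used as an upper bound.
        "meta_description_length": "green" if 0 < len(meta) <= 160 else "red",
        "meta_description_keyword":
            "green" if keyword_starts_early(meta, keyword) else "red",
    }


# Hypothetical sample post.
post = {
    "content": "Strapi SEO is hard. This post covers Strapi SEO checks in n8n.",
    "meta_description": "Strapi SEO checks, rebuilt by hand in an n8n workflow.",
}
report = onpage_report(post, "strapi seo")
```

The regex match is exact, so inflected or reordered variants of the keyword are not counted, which is the kind of miss mentioned in the list above.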

Manual OnPage Checks vs. choosing the right backend

As mentioned above, we use Strapi as our backend, and the thing that is missing is integrated SEO optimization. For instance, the Yoast plugin in WordPress shows you in real time how well your post is optimized (see screenshot). In the long run, we should probably build something like that for our backend too. But as we are not super happy with Strapi, I am unsure whether the investment makes sense.

The boring part: fixing my mistakes

Once I have a hypothesis for why certain posts might be underperforming, the manual work starts: rewriting the title and content, adding links, etc. There is certainly a lot of automation potential here as well, but for now my focus is on the points above.

Conclusion: Why old systems are market leaders for a reason and no, AI won't replace content creators

I wanted to write this post because it highlights a few things currently going on in tech:

- As I have outlined here, we decided against vibe coding our website. One reason is that yes, you can get a good-looking site fast, but once you get into the workflow details, like SEO optimization, you realize that you need to build those yourself.

- WordPress and other established systems are market leaders for a reason. The more time I spend with Strapi, the more I think about going for a headless WordPress option. They offer a lot of cool features out of the box. And while plugins can break your system quickly, tools like Yoast SEO are genuinely useful.

- There’s a lot of talk about how AI will replace content creators. I don’t get this notion at all. I am constantly trying new models and tools for content creation but no tool performs consistently well. As such, it shouldn’t be surprising that OpenAI is hiring for a Content Strategist. Posts like this one are a great example of why that role is still important.

- Finally, building reliable and repeatable N8N workflows is hard, despite what everybody on LinkedIn is saying. What I am doing here barely scratches the surface of what is necessary, and it still took me 16 full hours to finalize. I really do not see how "ChatGPT will replace content creators".